Research Article

Rotator Cuff Tears: A Systematic Review of Video Content on YouTube

Purpose: This study was performed to assess the quality of information on the multimedia website YouTube for rotator cuff tears.

Methods: YouTube was searched in January 2019 using the search terms “rotator cuff tear,” “rotator cuff injury,” and “torn rotator cuff” for Search 1. Search 2 was conducted using the search terms “rotator cuff repair” and “rotator cuff surgery.” Analysis was restricted to the first 25 results for each search term. Videos were evaluated by 2 independent reviewers using a novel scoring system organized into Diagnosis and Treatment sections (16 points each, total 32). One point was awarded for each component of the scoring system that a video explained. Video quality was compared between physician-sponsored and non-physician-sponsored videos. The number of views per video was recorded and analyzed for its relationship to video quality.

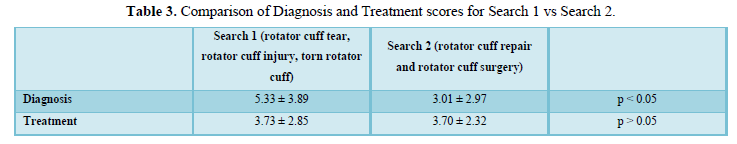

Results: Search 1 yielded 37 videos to evaluate. The mean quality scores differed significantly (p = 0.04): 5.33 for Diagnosis and 3.73 for Treatment. Search 2 yielded 33 videos. The mean quality scores were 3.01 for Diagnosis and 3.70 for Treatment. Search 1 and Search 2 had similar Treatment scores (p > 0.05), but Search 2 had a worse Diagnosis score than Search 1 (p = 0.005). Videos that included a physician sponsor had significantly higher average total scores than videos without a physician sponsor (11.1 vs 5.0, p = 0.03). The number of views per video was not significantly correlated with average total score (rs = -0.23, p = 0.17).

Conclusion: Patient-level information on YouTube about rotator cuff tears is incomplete, especially in non-physician-sponsored videos. This review highlights the need for higher-quality, more comprehensive video information for patients on rotator cuff tears.

Level of Evidence: IV, Systematic Review

Keywords: Rotator cuff tears, Rotator cuff repair, Patient education, YouTube

INTRODUCTION

Rotator cuff pathology is the most common shoulder condition for which patients seek treatment [1]. The prevalence of rotator cuff abnormalities ranges from 9.7% in patients aged 20 years and younger to 62% in patients aged 80 years and older [2].

Increasingly, patients are turning to online resources to access health information, with 60% of adults reporting going online in the previous month for health information [3]. YouTube is the 2nd most popular website on the internet and is an increasingly popular medium for healthcare information [4]. It allows industry, healthcare providers, educators, and consumers to freely deliver engaging multimedia content in an easily accessible format.

Although YouTube provides a user-friendly platform to access and share information, healthcare information is not peer-reviewed prior to uploading on the site. Several studies have shown that the quality of healthcare information on YouTube is often variable and at times inaccurate or misleading [5-7]. Recent studies of rotator cuff tear education on websites found poor and incomplete information and identified a need to improve the quality of information presented to patients [8-9]. Without adequate assessment of video information on YouTube for rotator cuff tears, patients’ expectations and knowledge about their diagnosis and treatment can be negatively impacted [10].

Given the heightened utilization of YouTube as a healthcare education medium, physicians ought to be aware of the quality of information patients are accessing about rotator cuff tears. This study evaluates the quality of both diagnostic and treatment information regarding rotator cuff tears in YouTube videos. We hypothesize that the quality of information available will be incomplete.

MATERIALS AND METHODS

Search strategy

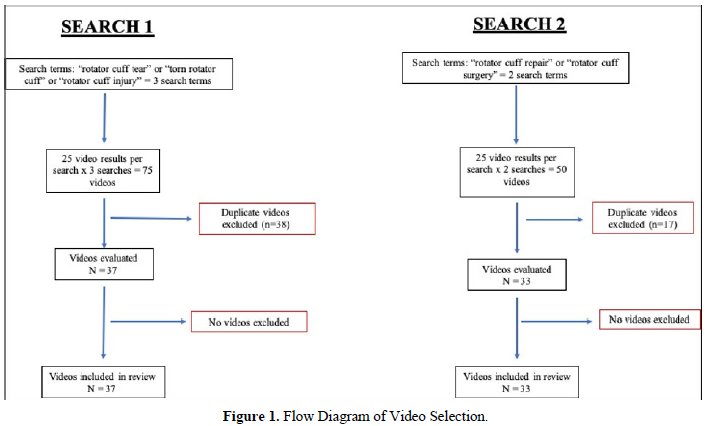

YouTube was searched based on terms patients would most likely use to find information about rotator cuff tears. Search 1 was conducted in January 2019 for videos containing the following search terms: (1) rotator cuff tear, (2) rotator cuff injury, and (3) torn rotator cuff. The search was performed by 2 reviewers (blinded for review) on the same day by accessing www.youtube.com via an Incognito window on a Google Chrome browser from an IP address in Vail, Colorado. Video URLs were recorded from the top 25 results from each search, for a total of 75 results. Duplicate videos were then excluded. Exclusion criteria were non-English language videos or those unrelated to rotator cuff pathology. In order to address potential search term bias, an additional search (Search 2) was conducted on YouTube with more of a treatment focus, with the search terms (1) rotator cuff repair and (2) rotator cuff surgery. The same exclusion criteria for Search 1 were applied for Search 2. Search methodology is shown in Figure 1. Our study was exempt from institutional review by our board of ethics due to use of public access data alone.
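The pooling and de-duplication step described above can be sketched in a few lines of Python. This is only an illustration, not the authors' procedure: the function names `video_id` and `pool_results` and the example URLs are hypothetical, and it assumes each recorded URL is a standard `watch?v=...` link.

```python
from urllib.parse import urlparse, parse_qs


def video_id(url: str) -> str:
    """Return the video ID (the `v` query parameter) of a YouTube watch URL."""
    return parse_qs(urlparse(url).query)["v"][0]


def pool_results(results_by_term: dict[str, list[str]], top_n: int = 25) -> list[str]:
    """Pool the first `top_n` URLs per search term, dropping duplicate videos.

    The first occurrence of each video is kept, in first-seen order.
    """
    seen: set[str] = set()
    pooled: list[str] = []
    for urls in results_by_term.values():
        for url in urls[:top_n]:
            vid = video_id(url)
            if vid not in seen:
                seen.add(vid)
                pooled.append(vid)
    return pooled


# Hypothetical example data (not taken from the study).
example = {
    "rotator cuff tear": [
        "https://www.youtube.com/watch?v=A1",
        "https://www.youtube.com/watch?v=B2",
    ],
    "torn rotator cuff": [
        "https://www.youtube.com/watch?v=B2&t=10s",
        "https://www.youtube.com/watch?v=C3",
    ],
}
print(pool_results(example))  # -> ['A1', 'B2', 'C3']
```

Comparing video IDs rather than raw URLs means that the same video reached through slightly different links (for example, with a timestamp parameter) is counted once.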

Video evaluation

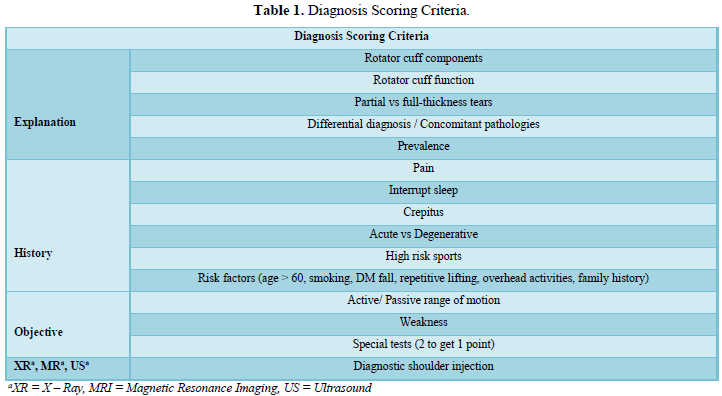

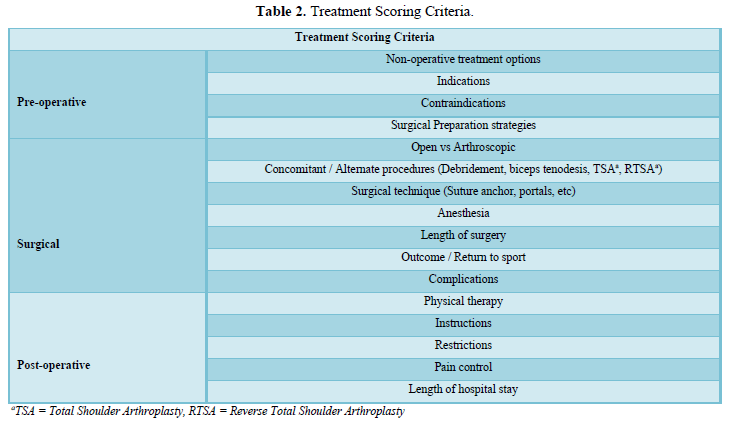

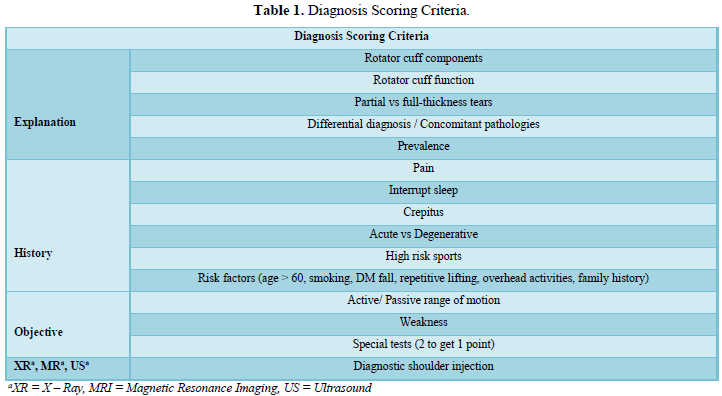

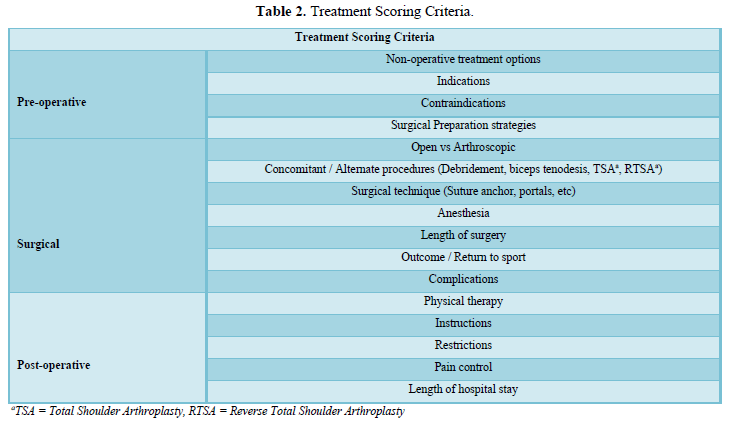

After establishing the final list of videos, video information was independently scored by two medically trained evaluators (blinded for review). In order to score the videos, an author-derived score was created. The components of the scoring system were created by fellowship-trained orthopedic surgeons (blinded for review) and consisted of elements significant to the diagnosis and treatment of rotator cuff tears. The scoring system was divided into two sections: Diagnosis and Treatment. This approach was modeled after the scoring system created by MacLeod et al. to evaluate video quality regarding femoroacetabular impingement [7]. Each section was subdivided into three subsections. Explanation, history, and objective findings comprised the subsections within the Diagnosis section (Table 1). The Treatment section was divided into preoperative care, surgical technique, and postoperative care (Table 2). Each individual criterion within a subsection was awarded one point if it was explained within the video. A score of zero was awarded if no explanation or mention occurred. Each section (Diagnosis and Treatment) was worth sixteen points, for a maximum score of 32 points per video. Each reviewer scored each video twice to evaluate intra-rater as well as inter-rater reliability. Scores from each reviewer were combined and averaged to generate mean quality scores for each video included.
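As a minimal sketch of the scoring arithmetic described above: each section has sixteen binary criteria, a rating pass yields the count of criteria explained, and a video's mean quality score averages all rating passes (two reviewers, two passes each). The function names and the example ratings are hypothetical; the actual criteria are those listed in Tables 1 and 2.

```python
from statistics import mean

SECTION_MAX = 16  # points per section (Diagnosis or Treatment), as stated in the text


def section_score(criteria_met: list[bool]) -> int:
    """One rating pass: one point per criterion explained, zero otherwise."""
    if len(criteria_met) != SECTION_MAX:
        raise ValueError(f"expected {SECTION_MAX} criteria, got {len(criteria_met)}")
    return sum(criteria_met)


def mean_quality_score(pass_scores: list[int]) -> float:
    """Average the section scores from all rating passes for one video."""
    return mean(pass_scores)


# Hypothetical Diagnosis ratings for a single video: reviewer 1 (two passes)
# and reviewer 2 (two passes).
diagnosis_passes = [5, 6, 5, 6]
treatment_passes = [3, 3, 4, 4]

diagnosis = mean_quality_score(diagnosis_passes)
treatment = mean_quality_score(treatment_passes)
print(diagnosis, treatment, diagnosis + treatment)  # -> 5.5 3.5 9.0
```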

Additionally, the videos were categorized by authorship as physician-sponsored or non-physician-sponsored. The number of times a video had been viewed by the public was recorded when the first reviewer scored the video for the first time. If a video contained misinformation or misleading statements, this was noted by the raters and evaluated by a fellowship-trained orthopedic surgeon (blinded for review).

Statistical analysis

Statistical analysis was performed using RStudio. Independent-sample t-tests for normally distributed data and the Mann-Whitney U test for non-normally distributed data were used to determine differences in video quality by physician sponsorship. An ANOVA was used to determine quality differences between search terms. A Spearman rank correlation coefficient for non-normally distributed data was used to determine the association between video quality and number of views, and between video quality and the order in which a video appeared after a search. The intraclass correlation coefficient (ICC) for intra-rater and inter-rater reliability (single measure, absolute agreement) was calculated on 20 randomly selected videos. The ICC values were graded using the scale described by Fleiss [11]: excellent reliability (0.75 < ICC ≤ 1.00), fair to good reliability (0.40 ≤ ICC ≤ 0.75), and poor reliability (0.00 ≤ ICC < 0.40). A p-value of less than 0.05 was used to determine statistical significance. No funding was required for this study.
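For readers unfamiliar with these statistics, the following Python sketch illustrates two of them: the Spearman rank correlation (a Pearson correlation computed on ranks, with tied values given their average rank) and the Fleiss grading of an ICC value. It is not the authors' R code; the function names are hypothetical, and it assumes neither input is constant.

```python
from math import sqrt
from statistics import mean


def average_ranks(values: list[float]) -> list[float]:
    """Rank values from 1..n, assigning tied values the average of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over the run of values tied with position i.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2 + 1  # mean of the 1-based positions i+1 .. j+1
        for k in range(i, j + 1):
            ranks[order[k]] = avg
        i = j + 1
    return ranks


def spearman_rho(x: list[float], y: list[float]) -> float:
    """Spearman rank correlation: Pearson correlation of the rank vectors."""
    rx, ry = average_ranks(x), average_ranks(y)
    mx, my = mean(rx), mean(ry)
    cov = sum((a - mx) * (b - my) for a, b in zip(rx, ry))
    sx = sqrt(sum((a - mx) ** 2 for a in rx))
    sy = sqrt(sum((b - my) ** 2 for b in ry))
    return cov / (sx * sy)


def grade_icc(icc: float) -> str:
    """Grade an ICC value on the Fleiss scale quoted in the text."""
    if icc > 0.75:
        return "excellent"
    if icc >= 0.40:
        return "fair to good"
    return "poor"


print(average_ranks([10, 20, 20, 30]))  # -> [1.0, 2.5, 2.5, 4.0]
print(grade_icc(0.969))                 # -> excellent
```

Working on ranks rather than raw values is what makes the coefficient suitable for skewed quantities such as view counts, where a handful of videos can dominate the totals.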

RESULTS

Using three separate search terms for Search 1 (“rotator cuff tear,” “torn rotator cuff,” and “rotator cuff injury”) and collecting the first 25 results for each term, the initial search yielded 75 videos. There were 38 duplicate videos that were removed, and zero videos were excluded. The final list consisted of 37 videos to evaluate (Figure 1). Rater 1 (blinded for review) had an intra-rater reliability of 0.969 for the Diagnosis section and 0.966 for the Treatment section. Rater 2 (blinded for review) had an intra-rater reliability of 0.952 for the Diagnosis section and 0.966 for the Treatment section. Inter-rater reliability for the Diagnosis and Treatment sections was 0.952 and 0.966, respectively.

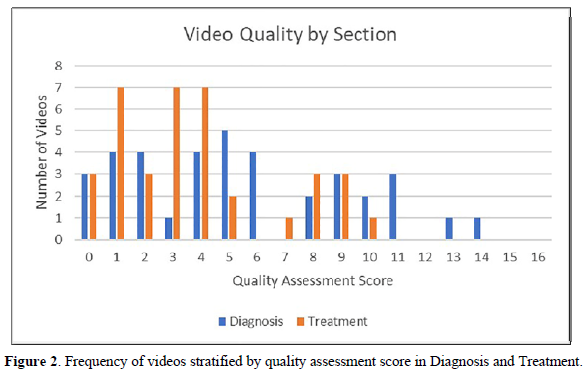

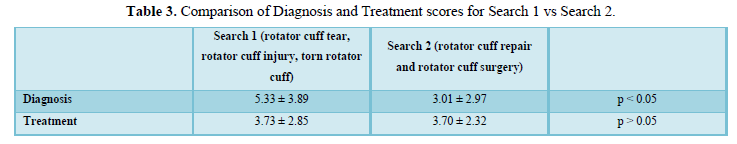

Out of 32 possible points, the average total score for the 37 videos of Search 1 was 9.07 ± .61. The average score for Diagnosis was 5.33 ± 3.89 (range, 0 – 13.5) and the average score for Treatment was 3.73 ± 2.85 (range, 0 – 9.5). The frequency distribution of the quality assessment score is shown in Figure 2. The difference between Diagnosis and Treatment was statistically significant (p = 0.04), possibly due to the focus on diagnosis in the search terms. Thus, an additional search (Search 2) was initiated with more of a focus on treatment. Search 2 was conducted with the search terms “rotator cuff repair” and “rotator cuff surgery.” The initial search yielded 50 videos. There were 17 duplicate videos that were removed, and no videos were excluded. There were 33 videos in Search 2 left to evaluate (Figure 1). The average total score for Search 2 was 6.71 ± 4.82 out of 32 possible points. The average score for Diagnosis was 3.01 ± 2.97 (range, 0 – 11.25) and the average score for Treatment was 3.70 ± 2.32 (range, 0 – 9.5). The Treatment score for Search 2 was not significantly better than that for Search 1 (p > 0.05). However, Search 2 did have a significantly worse Diagnosis score than Search 1 (p = 0.005). A comparison of Treatment and Diagnosis scores from Search 1 and Search 2 can be found in Table 3.

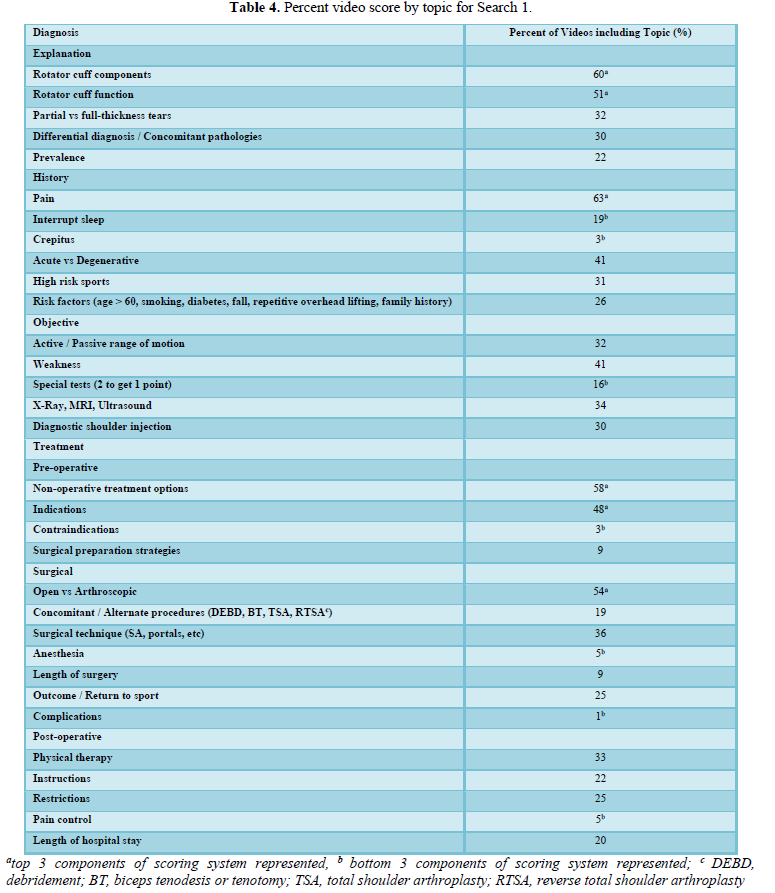

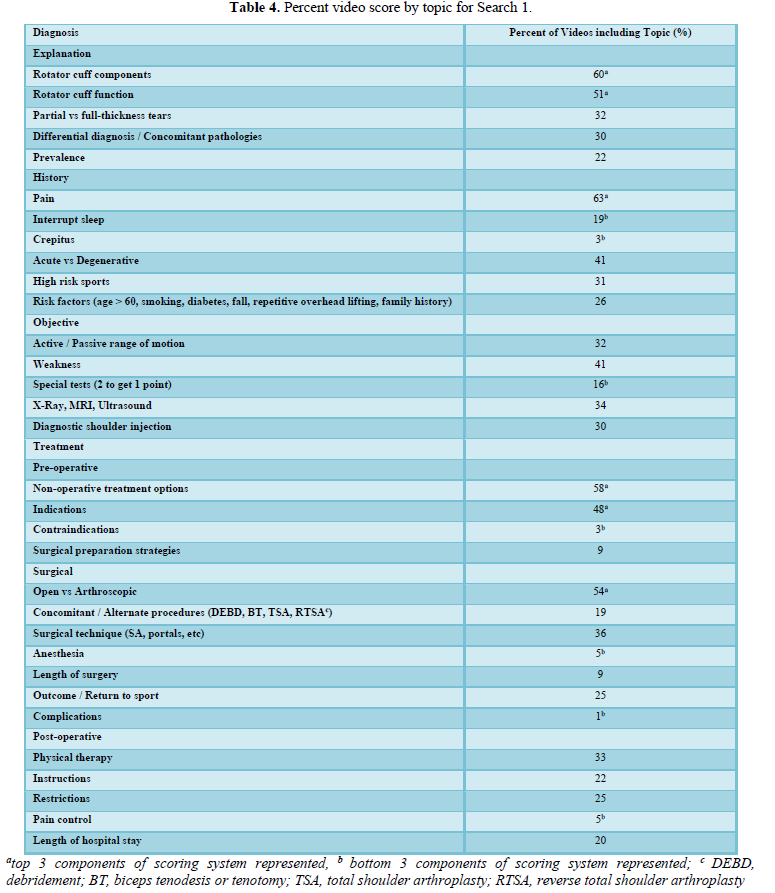

Further analysis was conducted for the results of the original search (Search 1) due to its higher score compared to Search 2. Based on the average scores for each subsection of Diagnosis, the videos met 40% of the explanation total, 29.5% of the history total, and 30.8% of the objective total. Based on the average scores for each subsection of Treatment, the videos met 29.5% of the pre-op total, 21.4% of the surgical total, and 20.9% of the post-op total. In the Diagnosis section, the three criteria with the highest percentage representation from all videos in descending order were “pain,” “rotator cuff components,” and “rotator cuff function.” The lowest-scoring criteria were “crepitus,” “special tests,” and “interrupt sleep.” In the Treatment section, the three criteria with the highest percentage representation from all videos in descending order were “non-operative treatment options,” “open vs arthroscopic,” and “indications.” The lowest-scoring criteria were “complications,” “contraindications,” “anesthesia,” and “pain control” (Table 4).

Video quality was not significantly correlated (p > 0.05) with the order in which a video appeared for any of the five search terms used. Videos that included a physician sponsor had significantly higher average total scores than videos without a physician sponsor (11.1 vs 5.0, p = 0.03). The number of views per video was not significantly correlated with average total score (rs = -0.23, p = 0.17). There was no significant difference in total quality score among the five search terms (Search 1 and Search 2) (p > 0.05).
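A two-group comparison such as physician-sponsored versus non-physician-sponsored videos can be illustrated with the Mann-Whitney U statistic, one of the tests named in the statistical analysis. The sketch below computes only the U statistics, not a p-value. It is a hypothetical illustration in Python rather than the authors' R analysis, the example scores are invented, and the text does not state which of the two named tests produced the reported p-value.

```python
def average_ranks(values: list[float]) -> list[float]:
    """Rank values from 1..n, assigning tied values the average of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2 + 1  # mean of the 1-based positions i+1 .. j+1
        for k in range(i, j + 1):
            ranks[order[k]] = avg
        i = j + 1
    return ranks


def mann_whitney_u(group_a: list[float], group_b: list[float]) -> tuple[float, float]:
    """Return (U_a, U_b) computed from the rank sums of the pooled sample."""
    n_a, n_b = len(group_a), len(group_b)
    ranks = average_ranks(list(group_a) + list(group_b))
    rank_sum_a = sum(ranks[:n_a])
    u_a = rank_sum_a - n_a * (n_a + 1) / 2
    u_b = n_a * n_b - u_a  # the two statistics always sum to n_a * n_b
    return u_a, u_b


# Invented total scores (0-32 scale), for illustration only.
physician = [12.0, 9.5, 14.0, 8.0]
non_physician = [4.0, 6.5, 3.0, 7.0, 5.0]
print(mann_whitney_u(physician, non_physician))  # -> (20.0, 0.0)
```

A U value of zero for one group means every score in that group ranks below every score in the other, which is the most extreme separation the test can observe.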

The raters noted three instances of misinformation or misleading statements in two videos. One video selling a biologics product grossly misrepresented and fabricated research on rotator cuff surgery. The other, a physical therapist-sponsored video, incorrectly identified high-risk activities and indications for surgery.

DISCUSSION

This study is the first to evaluate the quality of rotator cuff tear and repair information on YouTube. The results show that patients searching YouTube for information on rotator cuff tears will encounter content that is variable and incomplete, with an average total score of 9.07 for Search 1 and 6.71 for Search 2. Videos with physician sponsorship had significantly higher scores than videos without physician sponsorship. Moreover, video quality was not significantly correlated with either the number of views per video or the order in which a video appeared after a search. A significant difference was found, however, between the Diagnosis and Treatment sections of the scoring criteria after Search 1. Thus, a second search (Search 2: “rotator cuff repair” and “rotator cuff surgery”) was conducted to investigate whether Search 1’s search terms (“rotator cuff tear,” “rotator cuff injury,” and “torn rotator cuff”) were biasing quality scores in favor of diagnosis. Search 1 did have a significantly higher score than Search 2 for Diagnosis (5.33 vs 3.01), but both searches had comparable Treatment scores (Search 1, 3.73 vs Search 2, 3.70), indicating that the search terms in Search 1 were more informative.

Previous studies have evaluated the quality of YouTube information for different medical specialties including internal medicine [12-17], otolaryngology [18-19], urology [20], and neurology [21-22]. Within orthopedics, the consensus in previous studies has been that the available information is insufficient and of poor quality [6,7,23-27]. This study is especially important given the popularity of searching the Internet for health information [28-30] and rotator cuff disease being the most common disability of the shoulder [31]. Furthermore, the present study is relevant for both patients and physicians, as it can provide a basis for physicians to inform patients of the incomplete information they can encounter regarding rotator cuff tears on YouTube.

Although the history subsection of Diagnosis in Search 1 scored lowest, “pain” was the highest scoring component of the entire Diagnosis scoring criteria, with 63% of videos explaining it as a symptom of a rotator cuff tear. However, other symptoms indicative of a rotator cuff tear such as “interrupt sleep” and “crepitus” were among the least explained points. This deficiency, coupled with the lack of videos explaining “special tests” for diagnosing a tear (16% of videos) and “differential diagnosis / concomitant pathologies” (30% of videos), may falsely lead patients into thinking their symptoms come from a rotator cuff tear when in fact the differential is extensive.

Overall, Treatment scored significantly lower than Diagnosis for Search 1. While many videos appropriately discussed possible “non-operative treatment options” and “open vs arthroscopic” approaches, “contraindications” and “complications” were only mentioned in an average of 1 and 0.5 videos, respectively. These results are similar to the findings reported in a comparable study on femoroacetabular impingement by MacLeod et al. [7]. Furthermore, “contraindications” and “complications” were not explained in any new videos after Search 2 was conducted. The lack of discussion of information relevant to treatment of rotator cuff tears can impact patients’ understanding and expectations of treatment, especially in situations where a patient-physician relationship has not been well established.

This study also highlights the superiority of physician-created videos and the discrepancy between video quality and the number of views a video received. The two highest scoring videos were lectures by orthopedic surgeons (‘Rotator Cuff Tears Kristofer Jones, MD – UCLA Health’ and ‘Rotator Cuff Tears: Do You Need Surgery’). However, they ranked 17th and 31st, respectively, in number of views. This could be explained by the length of the videos, as both lasted over twenty minutes, and by the lack of animation or patient testimonial, two factors that were included in the videos with the most views.

YouTube is a ubiquitous platform that has incredible potential to educate and empower patients. Its immediate availability and ease of use mean that many patients can explore online resources prior to seeing a care provider. Moreover, patients are increasingly trusting of information that they find on the internet [3]. Early exposure in combination with perceived trustworthiness can shape a patient’s expectations about their diagnosis and treatment. For this reason, physicians and other sports medicine care providers would be well served by directing patients to high-quality content, such as that disseminated by the American Academy of Orthopaedic Surgeons [32,33], or by generating their own high-quality, comprehensive content. Based on the results of this study, patients should look for physician-sponsored videos in order to obtain accurate, succinct information.

LIMITATIONS

There are several limitations that must be mentioned in the context of the presented results. No validated scoring criteria exist for the review of online video information. However, the scoring criteria created for this study were adapted from similar criteria created by MacLeod et al. [7], and a similar approach has been followed by prior studies [5,6]. Additionally, the scoring criteria had excellent intra-rater and inter-rater reliability, which demonstrates the consistency of the grading scale and of the grading by the authors. Another limitation of this study could stem from limiting the scored videos to the first 25 results. However, Eysenbach [34] showed that Internet users are most likely to consume the first 10 results. This study was also limited by the single time point and location used to search videos. YouTube’s algorithm for search results varies by geographic location and the time at which the search was conducted, which can result in variable search results. Furthermore, a patient’s YouTube video viewing may follow a pattern whereby a result is selected from a search and the next video is chosen from “related videos” on the same webpage, rather than returning to the original search results [4]. Our study did not account for this habit. Finally, our analysis was limited to videos accessed only through YouTube and excluded videos that could be accessed from other websites.

CONCLUSION

Patient-level information on YouTube about rotator cuff tears is incomplete, especially in non-physician-sponsored videos. This review highlights the need for higher-quality, more comprehensive video information for patients on rotator cuff tears.

REFERENCES

1. McFarland EG, Tanaka MJ, Papp DF (2008) Examination of the shoulder in the overhead and throwing athlete. Clin Sports Med 27(4): 553-578.

2. Teunis T, Lubberts B, Reilly BT, Ring D (2014) A systematic review and pooled analysis of the prevalence of rotator cuff disease with increasing age. J Shoulder Elbow Surg 23(12): 1913-1921.

3. Taylor H (2011) The Growing Influence and Use of Health Care Information Obtained Online. Harris Interactive. Accessed on: September 15, 2011. Available online at: https://www.prnewswire.com/news-releases/the-growing-influence-and-use-of-health-care-information-obtained-online-129893198.html

4. Sampson M, Cumber J, Li C, Pound CM, Fuller A, et al. (2013) A systematic review of methods for studying consumer health YouTube videos, with implications for systematic reviews. PeerJ 1: e147.

5. Cassidy JT, Fitzgerald E, Cassidy ES, Cleary M, Byrne DP, et al. (2018) YouTube provides poor information regarding anterior cruciate ligament injury and reconstruction. Knee Surg Sports Traumatol Arthrosc 26(3): 840-845.

6. Koller U, Waldstein W, Schatz KD, Windhager R (2016) YouTube provides irrelevant information for the diagnosis and treatment of hip arthritis. Int Orthop 40(10): 1995-2002.

7. MacLeod MG, Hoppe DJ, Simunovic N, Bhandari M, Philippon MJ, et al. (2015) YouTube as an information source for femoroacetabular impingement: a systematic review of video content. Arthroscopy 31(1): 136-142.

8. Dalton DM, Kelly EG, Molony DC (2015) Availability of accessible and high-quality information on the Internet for patients regarding the diagnosis and management of rotator cuff tears. J Shoulder Elbow Surg 24: e135-140.

9. Goldenberg BT, Schairer WW, Dekker TJ, Lacheta L, Millett PJ (2019) Online Resources for Rotator Cuff Repair: What are Patients Reading? Arthroscopy, Sports Medicine, and Rehabilitation 1(1): 85-92.

10. Madathil KC, Rivera-Rodriguez AJ, Greenstein JS, Gramopadhye AK (2015) Healthcare information on YouTube: A systematic review. Health Informatics J 21(3): 173-194.

11. Fleiss J (1986) The Design and Analysis of Clinical Experiments. John Wiley & Sons, New York.

12. Pandey A, Patni N, Singh M, Sood A, Singh G (2010) YouTube as a source of information on the H1N1 influenza pandemic. Am J Prev Med 38(3): e1-e3.

13. Pant S, Deshmukh A, Murugiah K, Kumar G, Sachdeva R, et al. (2012) Assessing the credibility of the "YouTube approach" to health information on acute myocardial infarction. Clin Cardiol 35(5): 281-285.

14. Camm CF, Sunderland N, Camm AJ (2013) A quality assessment of cardiac auscultation material on YouTube. Clin Cardiol 36(2): 77-81.

15. Sunderland N, Camm CF, Glover K, Watts A, Warwick G (2014) A quality assessment of respiratory auscultation material on YouTube. Clin Med (Lond) 14(4): 391-395.

16. Loeb S, Sengupta S, Butaney M, et al. (2019) Dissemination of Misinformative and Biased Information about Prostate Cancer on YouTube. Eur Urol 75(4): 564-567.

17. Stellefson M, Chaney B, Ochipa K, Chaney D, Haider Z, et al. (2014) YouTube as a source of chronic obstructive pulmonary disease patient education: a social media content analysis. Chron Respir Dis 11(2): 61-71.

18. Borgersen NJ, Henriksen MJ, Konge L, Sorensen TL, Thomsen AS, et al. (2016) Direct ophthalmoscopy on YouTube: Analysis of instructional YouTube videos' content and approach to visualization. Clin Ophthalmol 10: 1535-1541.

19. Sorensen JA, Pusz MD, Brietzke SE (2014) YouTube as an information source for pediatric adenotonsillectomy and ear tube surgery. Int J Pediatr Otorhinolaryngol 78(1): 65-70.

20. Sood A, Sarangi S, Pandey A, Murugiah K (2011) YouTube as a source of information on kidney stone disease. Urology 77(3): 558-562.

21. Kerber KA, Burke JF, Skolarus LE, Callaghan BC, Fife TD, et al. (2012) A prescription for the Epley maneuver: www.youtube.com. Neurology 79(4): 376-380.

22. Heathcote LC, Pate JW, Park AL, Leake HB, Moseley GL, et al. (2019) Pain neuroscience education on YouTube. PeerJ 7: e6603.

23. Fischer J, Geurts J, Valderrabano V, Hugle T (2013) Educational quality of YouTube videos on knee arthrocentesis. J Clin Rheumatol 19(7): 373-376.

24. Ovenden CD, Brooks FM (2018) Anterior Cervical Discectomy and Fusion YouTube Videos as a Source of Patient Education. Asian Spine J 12(6): 987-991.

25. Brooks FM, Lawrence H, Jones A, McCarthy MJ (2014) YouTube as a source of patient information for lumbar discectomy. Ann R Coll Surg Engl 96(2): 144-146.

26. Erdem MN, Karaca S (2018) Evaluating the Accuracy and Quality of the Information in Kyphosis Videos Shared on YouTube. Spine (Phila Pa 1976) 43(22): E1334-E1339.

27. Addar A, Marwan Y, Algarni N, Berry G (2017) Assessment of "YouTube" Content for Distal Radius Fracture Immobilization. J Surg Educ 74(5): 799-804.

28. Marton C, Wei Choo C (2012) A review of theoretical models of health information seeking on the web. Journal of Documentation 68(3): 330-352.

29. Stevenson FA, Kerr C, Murray E, Nazareth I (2007) Information from the Internet and the doctor-patient relationship: the patient perspective: A qualitative study. BMC Fam Pract 8(1): 47.

30. Kivits J (2006) Informed patients and the internet: A mediated context for consultations with health professionals. J Health Psychol 11(2): 269-282.

31. Tashjian RZ (2012) Epidemiology, natural history, and indications for treatment of rotator cuff tears. Clin Sports Med 31(4): 589-604.

32. Armstrong AD WJ, Athwal GS (2017) Rotator Cuff Tears: Surgical Treatment Options. American Academy of Orthopaedic Surgeons. Accessed on: March, 2017. Available online at: https://orthoinfo.aaos.org/en/treatment/rotator-cuff-tears-surgical-treatment-options/

33. Athwal GS AA (2017) Rotator Cuff Tears. American Academy of Orthopaedic Surgeons. Accessed on: March, 2017. Available online at: https://orthoinfo.aaos.org/en/diseases-conditions/rotator-cuff-tears/

34. Eysenbach G, Kohler C (2002) How do consumers search for and appraise health information on the world wide web? Qualitative study using focus groups, usability tests, and in-depth interviews. BMJ 324: 573-577.
